Spark Architecture

Spark’s component architecture supports cluster computing and distributed applications. This guide does not cover every component of the broader Spark architecture, only those that the Incorta platform uses.

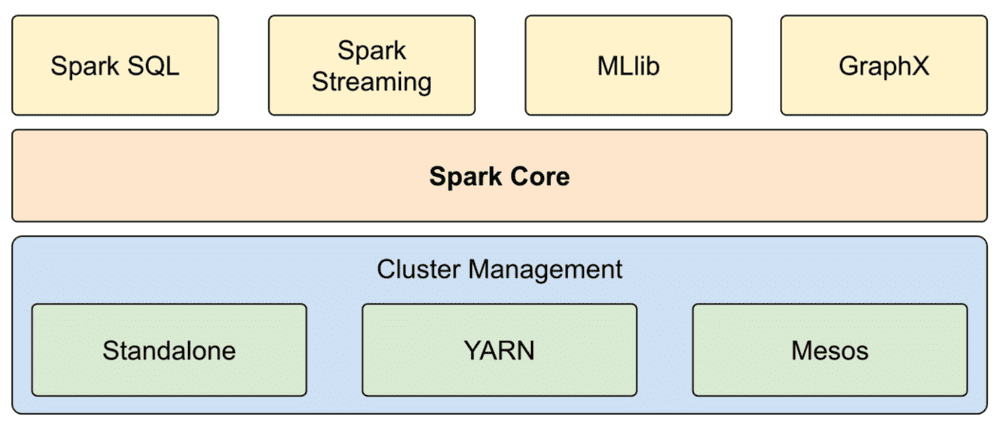

Spark Core

Spark Core contains the basic Spark functionality, including components for task scheduling, memory management, interaction with storage systems, and fault recovery. The core engine powers multiple higher-level components specialized for various workloads. Spark Core is in the org.apache.spark package.

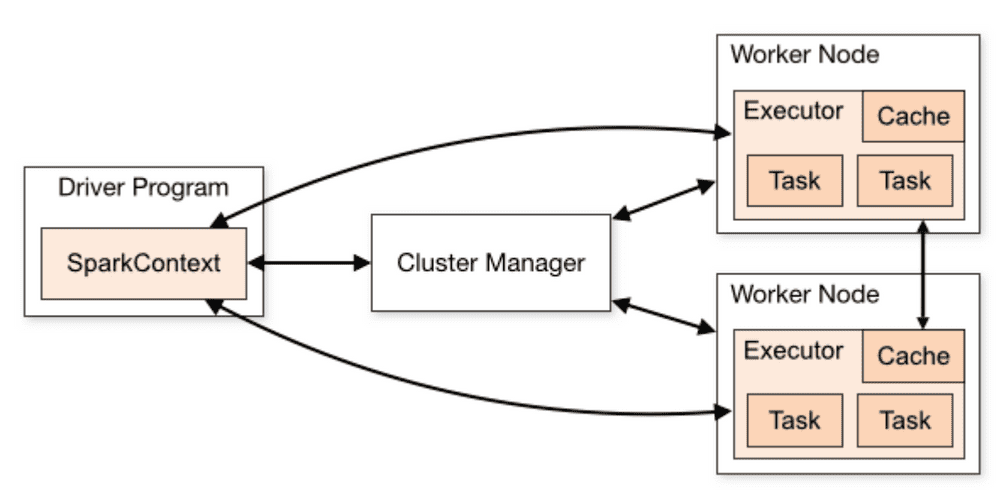

SparkContext

The SparkContext is the entry point to Spark Core. It sets up internal services and establishes a connection to a Spark execution environment. Once created, use the SparkContext to create RDDs, create broadcast variables, access Spark services, and run jobs. You can use the same SparkContext throughout the life of an application, until the SparkContext is stopped.

The Incorta platform is responsible for invoking the SparkContext for each task that Incorta requests of Spark.

Spark SQL

Spark SQL is the Spark module for structured data processing, found in the org.apache.spark.sql package. The Spark SQL interfaces provide Spark with more information about the structure of both the data and the computation being performed. Spark SQL allows developers to intermix SQL queries with the programmatic data manipulations supported by RDDs, DataFrames, and Datasets, all within a single application, thus combining SQL with complex analytics.

Incorta relies heavily on the Spark SQL libraries, both for defining Materialized Views with SQL syntax and for the Incorta SQL Interface. Both Materialized Views and the Incorta SQL Interface are discussed in more detail in other sections.

DataFrames

A DataFrame is a Dataset organized into named columns. It is conceptually equivalent to a table in a relational database or a data frame in R/Python, but with many optimizations in Spark. In Scala, DataFrame is a type alias for Dataset[Row]. You can construct DataFrames from a wide array of data sources, such as structured data files, tables in Hive, external databases, or existing RDDs. In many cases, you can perform the same operations on a DataFrame as on an RDD. You can also register a DataFrame as a temporary view (called a temporary table before Spark 2.0), which allows you to run SQL queries over its data.

MLlib

MLlib is Spark’s Machine Learning (ML) library. As of Spark 2.0, the primary ML API is the org.apache.spark.ml package, which supports DataFrames. MLlib provides multiple types of machine learning algorithms, including classification, regression, clustering, and collaborative filtering, as well as supporting functionality such as model evaluation and data import.

Cluster Management

To run on a cluster, the SparkContext connects to a cluster manager. The cluster manager allocates resources across applications. This includes tracking resource consumption, scheduling job execution, and managing the cluster nodes.

Although there are other ways to deploy Spark, Spark supports three main cluster manager configurations: the included Standalone cluster manager, Apache Hadoop YARN, and Apache Mesos.

The Driver node is the machine where the Spark application process runs. This process is often referred to as the Driver process. The Driver process creates the SparkContext and uses it to connect to the cluster manager. The Incorta platform creates the Driver program on the Incorta server.

When the Driver process needs resources to run jobs and tasks, it asks the Spark Master node for them. The Master node allocates resources on the Worker nodes in the cluster, which creates an Executor on each allocated Worker node for the Driver process. The Driver can then run tasks in the Executor on that Worker node. A Worker node is any node that can run application code in the cluster.

Standalone Spark

Spark includes the Standalone cluster manager. By defining containers for Java Virtual Machines (JVMs), the Standalone manager makes it easy to set up a cluster. In this mode, the cluster manager runs on the Master node and all other machines in the cluster are considered Worker nodes. Spark uses a Master daemon that coordinates the Workers, which in turn run the Executors.

The Spark installation that comes bundled with Incorta 4 uses Standalone cluster management.

Standalone mode requires each application to run an Executor on every node in the cluster. The Spark Master node houses a resource manager that tracks resource consumption and schedules job execution. The resource manager on the Master node knows the state of each Worker node including, for instance, how much CPU and RAM is in use and how much is currently available.
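The bookkeeping described above can be pictured with a small toy model. This is not Spark's scheduler code: the Worker and Master classes, their fields, and the request_executors method are hypothetical names invented for this sketch, which only illustrates a Master tracking free CPU and RAM per Worker and granting Executors on each Worker that has room.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Worker:
    """Resources a hypothetical Worker node reports to the Master."""
    name: str
    total_cores: int
    total_ram_mb: int
    used_cores: int = 0
    used_ram_mb: int = 0

    @property
    def free_cores(self) -> int:
        return self.total_cores - self.used_cores

    @property
    def free_ram_mb(self) -> int:
        return self.total_ram_mb - self.used_ram_mb


@dataclass
class Master:
    """Toy resource manager that knows the state of every Worker."""
    workers: Dict[str, Worker] = field(default_factory=dict)

    def register(self, worker: Worker) -> None:
        self.workers[worker.name] = worker

    def request_executors(self, cores: int, ram_mb: int) -> List[str]:
        """Grant one Executor on every Worker with enough free resources."""
        granted = []
        for worker in self.workers.values():
            if worker.free_cores >= cores and worker.free_ram_mb >= ram_mb:
                # Record the consumption so later requests see the new state.
                worker.used_cores += cores
                worker.used_ram_mb += ram_mb
                granted.append(worker.name)
        return granted


master = Master()
master.register(Worker("worker-1", total_cores=8, total_ram_mb=16384))
master.register(Worker("worker-2", total_cores=4, total_ram_mb=8192))

# A first application's Driver asks for Executors of 4 cores and 8 GB each.
first = master.request_executors(cores=4, ram_mb=8192)
# A second, identical request only fits on the Worker that still has capacity.
second = master.request_executors(cores=4, ram_mb=8192)
print(first, second)
```

In this sketch the second request is granted on fewer Workers because the Master already recorded what the first request consumed.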