Running Spark Applications

Multiple Spark applications can run at once in a Spark cluster. If you set up Incorta to integrate with an existing Spark cluster managed by Spark on YARN, you can decide on an application-by-application basis whether to run in YARN client mode or cluster mode.
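As a rough illustration of choosing the mode per application, the sketch below builds the argument list for Spark's own `spark-submit` tool, where `--master yarn` and `--deploy-mode` select YARN client or cluster mode. It does not describe how Incorta itself submits applications. The helper name `build_spark_submit` and the application file names are hypothetical.

```python
def build_spark_submit(app_path, deploy_mode="cluster", app_args=()):
    """Return the argument list for submitting one application to YARN."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError(f"unsupported deploy mode: {deploy_mode!r}")
    return [
        "spark-submit",
        "--master", "yarn",
        "--deploy-mode", deploy_mode,
        app_path,
        *app_args,
    ]


# Hypothetical applications: each one picks its own deploy mode.
interactive = build_spark_submit("explore.py", deploy_mode="client")
batch = build_spark_submit("nightly_load.py", deploy_mode="cluster")

print(" ".join(interactive))
print(" ".join(batch))
```

In client mode the driver runs in the process that submits the application, while in cluster mode it runs inside the cluster, so the choice usually depends on whether the submitting machine stays connected for the life of the application.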

Data Locality

Data locality can have a major impact on the performance of Spark jobs. If data and the code that operates on it are together, computation tends to be fast. If they are separated, one must move to the other. Typically, it is faster to ship serialized code from place to place than a chunk of data, because code is much smaller than data. Spark builds its scheduling around this general principle of data locality.

Data locality is how close data is to the code processing it. There are several levels of locality based on the data’s current location. In order from closest to farthest:

- PROCESS_LOCAL: data is in the same JVM as the running code. This is the fastest level.

- NODE_LOCAL: data is on the same node but not in the same JVM.

- NO_PREF: data has no locality preference and is accessed equally quickly from anywhere.

- RACK_LOCAL: data is on a different server on the same rack, so it needs to be sent over the network switch for the server rack.

- ANY: data is elsewhere on the network and not in the same rack.

When possible, Spark schedules all tasks at the best locality level. When there is no unprocessed data on any idle executor, Spark switches to lower locality levels. It then has two options: wait until a busy CPU frees up so that the task can start on the same server as its data, or immediately start the task somewhere farther away, which requires moving the data there. Spark typically waits a short time for a CPU to free up and, once that timeout expires, starts the task at a lower locality level.
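The fallback between locality levels can be sketched as a small model. This is a simplified illustration, not Spark's scheduler code: it steps through all five levels uniformly, and the function names `allowed_level` and `pick_slot` are hypothetical. The 3-second default mirrors Spark's `spark.locality.wait` setting, which controls how long Spark waits before giving up on a locality level.

```python
from enum import IntEnum


class Locality(IntEnum):
    """Locality levels, ordered from closest (0) to farthest (4)."""
    PROCESS_LOCAL = 0
    NODE_LOCAL = 1
    NO_PREF = 2
    RACK_LOCAL = 3
    ANY = 4


def allowed_level(waited_seconds, wait_per_level=3.0):
    """Return the farthest level the task may use after waiting this long.

    Each time `wait_per_level` seconds pass without a launch, the model
    gives up on the current level and permits the next one.
    """
    steps = int(waited_seconds // wait_per_level)
    return Locality(min(steps, Locality.ANY))


def pick_slot(free_slots, waited_seconds, wait_per_level=3.0):
    """Pick the closest free slot that the current wait allows, or None."""
    limit = allowed_level(waited_seconds, wait_per_level)
    candidates = [level for level in free_slots if level <= limit]
    return min(candidates) if candidates else None


# Only a rack-local slot is free. The task keeps waiting at first,
# then accepts the rack-local slot once enough time has passed.
early = pick_slot([Locality.RACK_LOCAL], waited_seconds=0)
late = pick_slot([Locality.RACK_LOCAL], waited_seconds=9)
print(early)      # None: still waiting for a closer slot
print(late.name)  # RACK_LOCAL
```

In a real cluster, raising the wait favors locality at the cost of idle time, and lowering it does the opposite.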

Process an application in cluster mode

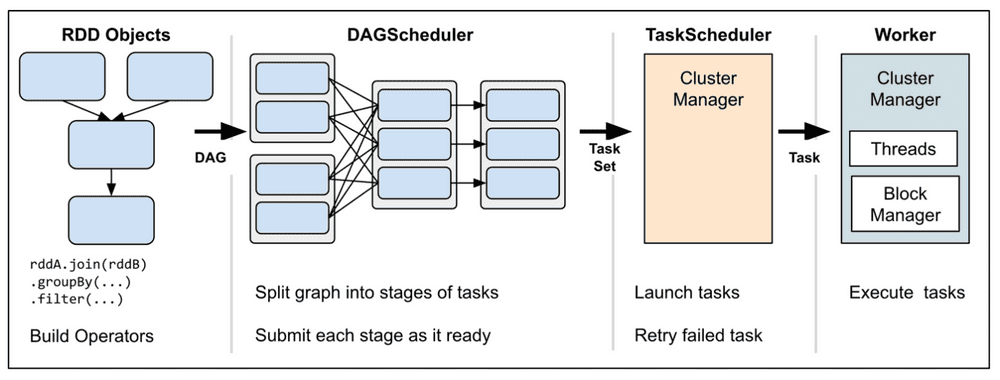

The following diagram illustrates the steps for how Spark in a cluster mode processes application code:

Spark processes an application in cluster mode through the following steps:

- From the Driver process, SparkContext connects to a cluster manager (Standalone, YARN, or Mesos).

- For an application, Spark creates an operator graph.

- The graph is submitted to a DAGScheduler. The DAGScheduler divides the operator graph into (map and reduce) stages.

- A stage consists of tasks based on partitions of the input data. The DAGScheduler pipelines operators together to optimize the graph. For example, it puts consecutive map operators in a single stage. The output of the DAGScheduler is a set of stages.

- The stages are passed on to the TaskScheduler. The TaskScheduler launches tasks through the cluster manager, such as Spark Standalone, YARN, or Mesos. The TaskScheduler is unaware of dependencies among stages.

- The Cluster Manager allocates resources across the other applications as required.

- Spark acquires Executors on Worker nodes in the cluster so that each application will get its own executor processes.

- SparkContext sends tasks to the Executors to complete. The tasks typically run application code written in Scala, Java, Python, or R.

- The Worker executes the tasks. Each executor runs in its own JVM, which is started for the application and is not shared with other applications.
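The stage-building steps above can be sketched in plain Python. This is a toy model under stated assumptions, not the DAGScheduler's implementation: it handles only a linear chain of operators, the `SHUFFLE_OPS` set is an illustrative subset chosen for this example, and `split_into_stages` and `tasks_for` are hypothetical names. The operator names themselves are real RDD operations.

```python
# Operators treated as needing a shuffle. Everything else is map-like.
# This set is an illustrative assumption, not Spark's internal list.
SHUFFLE_OPS = {"reduceByKey", "groupByKey", "join", "repartition"}


def split_into_stages(operators):
    """Group a linear chain of operators into stages.

    Map-like operators are pipelined into the current stage. A shuffle
    operator closes the current stage and starts the next one.
    """
    stages, current = [], []
    for op in operators:
        if op in SHUFFLE_OPS and current:
            stages.append(current)
            current = []
        current.append(op)
    if current:
        stages.append(current)
    return stages


def tasks_for(stages, num_partitions):
    """Return one (stage index, partition index) task per partition per stage.

    Using the same partition count for every stage is a simplification.
    """
    return [(i, p) for i, _ in enumerate(stages) for p in range(num_partitions)]


# A word-count style pipeline, written as a list of operator names.
pipeline = ["textFile", "flatMap", "map", "reduceByKey", "map"]
stages = split_into_stages(pipeline)
tasks = tasks_for(stages, num_partitions=4)

print(stages)      # two stages, split at reduceByKey
print(len(tasks))  # 8 tasks: 2 stages x 4 partitions
```

The split at `reduceByKey` reflects why map operators can share a stage: each output partition depends on a single input partition, so no data has to move between executors until the shuffle.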