Spark Application Model

The highest-level unit of computation in Spark is an application. A Spark application is a self-contained computation that runs user-supplied code to compute a result.

For example, when you create a materialized view in Incorta, the SQL or Python code that defines the materialized view is translated into code that runs as a Spark application.

Spark applications use the resources of multiple hosts within a Spark cluster. As a cluster computing framework, Spark schedules, optimizes, distributes, and monitors applications consisting of many computational tasks across many worker machines in a computing cluster.

A Spark application can be used for a single batch job, an interactive session with multiple jobs, or a long-lived server that continually satisfies requests from other applications. Spark applications run as independent sets of processes on a cluster. Each application always consists of a driver program and at least one executor on the cluster. The driver process creates the SparkContext for the main Spark application process.

Execution Model

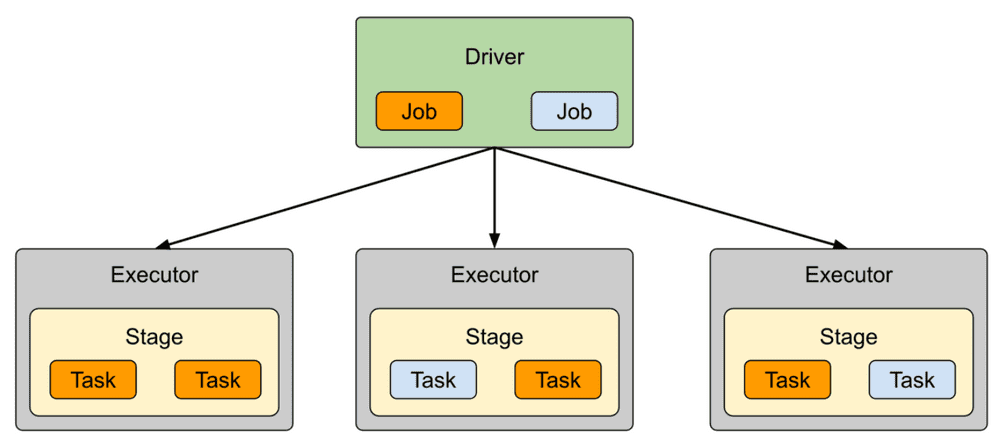

The execution model consists of the following runtime concepts: driver, executor, job, stage, and task. Each application has a driver process that coordinates its execution, which in most cases results in Spark starting executors to perform computations. Depending on the size of the job, there may be many executors distributed across the cluster. Once some of the executors have started, Spark attempts to match tasks to executors.

Driver

At runtime, a Spark application maps to a single driver process and a set of executor processes distributed across the hosts in a cluster. The driver process manages the job flow and schedules tasks. It runs the main() function of the application, creates the SparkContext, and remains available for as long as the application is running.

The driver process runs in a specific deploy mode, which determines where the driver process runs. There are two deploy modes: client and cluster.

In client mode, the driver process is often the same as the client process used to initiate the job. In other words, the submitter launches the driver outside of the cluster. When you quit the client, the application terminates because the driver terminates. For example, in client mode with spark-shell, the shell itself is the driver process. When you quit spark-shell, the Spark application and the driver process terminate. Similarly, when you stop a job from the Incorta application, the driver process terminates.

In cluster mode, the framework launches the driver inside the cluster. For example, when run on YARN or Mesos, the driver typically runs in the cluster. For this reason, cluster mode allows you to log out after starting a Spark application without terminating the application.
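The deploy mode is typically chosen when the application is submitted. As a minimal sketch, assuming the application is submitted with the spark-submit script, the following Python helper assembles the command line for either mode. The helper name build_submit_command, the my_app.py path, and the yarn master value are hypothetical placeholders used only for illustration; --master and --deploy-mode are spark-submit options.

```python
import shlex

VALID_DEPLOY_MODES = ("client", "cluster")


def build_submit_command(app_path, master, deploy_mode="client"):
    """Return the spark-submit argument list for the given deploy mode.

    Hypothetical helper for illustration; it only builds the command
    and does not launch anything.
    """
    if deploy_mode not in VALID_DEPLOY_MODES:
        raise ValueError(f"deploy_mode must be one of {VALID_DEPLOY_MODES}")
    return [
        "spark-submit",
        "--master", master,            # cluster manager to connect to
        "--deploy-mode", deploy_mode,  # where the driver process runs
        app_path,
    ]


# Placeholder application file and cluster manager.
client_cmd = build_submit_command("my_app.py", "yarn", "client")
cluster_cmd = build_submit_command("my_app.py", "yarn", "cluster")

print(shlex.join(client_cmd))
print(shlex.join(cluster_cmd))
```

The two commands differ only in the value passed to --deploy-mode, which is what decides whether the driver stays with the submitting client or moves into the cluster.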

Stop materialized view jobs from the Incorta application instead of the Spark Web UI so that both the materialized view job and the driver process stop at the same time. If you are in the process of saving a materialized view, canceling the save action may not stop the driver process, and you may need to kill it from the Spark Web UI.

Executor

Through its driver process, an application launches executors on worker nodes. An executor runs tasks and keeps data in memory or on disk. Each application has its own executors.

An executor performs work as defined by tasks. Executors also store cached or persisted data. An executor has a number of slots for running tasks and runs many tasks concurrently throughout its lifetime. The lifetime of an executor depends on whether dynamic allocation is enabled. With dynamic allocation, when an application becomes idle, its executors are released and can be acquired by other applications. Without it, executors are held for the duration of the application.
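To make the idea of task slots concrete, here is a rough sketch in plain Python. It assumes that the number of slots per executor is the executor's core count divided by the cores reserved per task, read from the Spark properties spark.executor.cores and spark.task.cpus, and that dynamic allocation is controlled by spark.dynamicAllocation.enabled. The function names and the example values are hypothetical, and the fallback of one executor core is an assumption of this sketch, since the real default depends on the cluster manager.

```python
def task_slots_per_executor(conf):
    """Estimate how many tasks one executor can run at the same time."""
    cores = int(conf.get("spark.executor.cores", 1))    # assumed fallback
    cpus_per_task = int(conf.get("spark.task.cpus", 1))
    return cores // cpus_per_task


def dynamic_allocation_enabled(conf):
    """Return True if the configuration turns dynamic allocation on."""
    value = conf.get("spark.dynamicAllocation.enabled", "false")
    return str(value).lower() == "true"


# Hypothetical configuration values for illustration.
example_conf = {
    "spark.executor.cores": "4",
    "spark.task.cpus": "1",
    "spark.dynamicAllocation.enabled": "true",
}

slots = task_slots_per_executor(example_conf)
print(f"task slots per executor: {slots}")
print(f"dynamic allocation: {dynamic_allocation_enabled(example_conf)}")
```

With these example values, each executor could run four tasks at once, and idle executors would be eligible for release.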

For most workloads, executors start on most or all nodes in the cluster. In certain optimization cases, you can explicitly specify the preferred locations to start executors using the annotated Developer API.

Job

A job comprises a series of parallel computing operators that run on a set of data. Each job is divided into smaller sets of tasks called stages, which run in sequence and depend on each other.

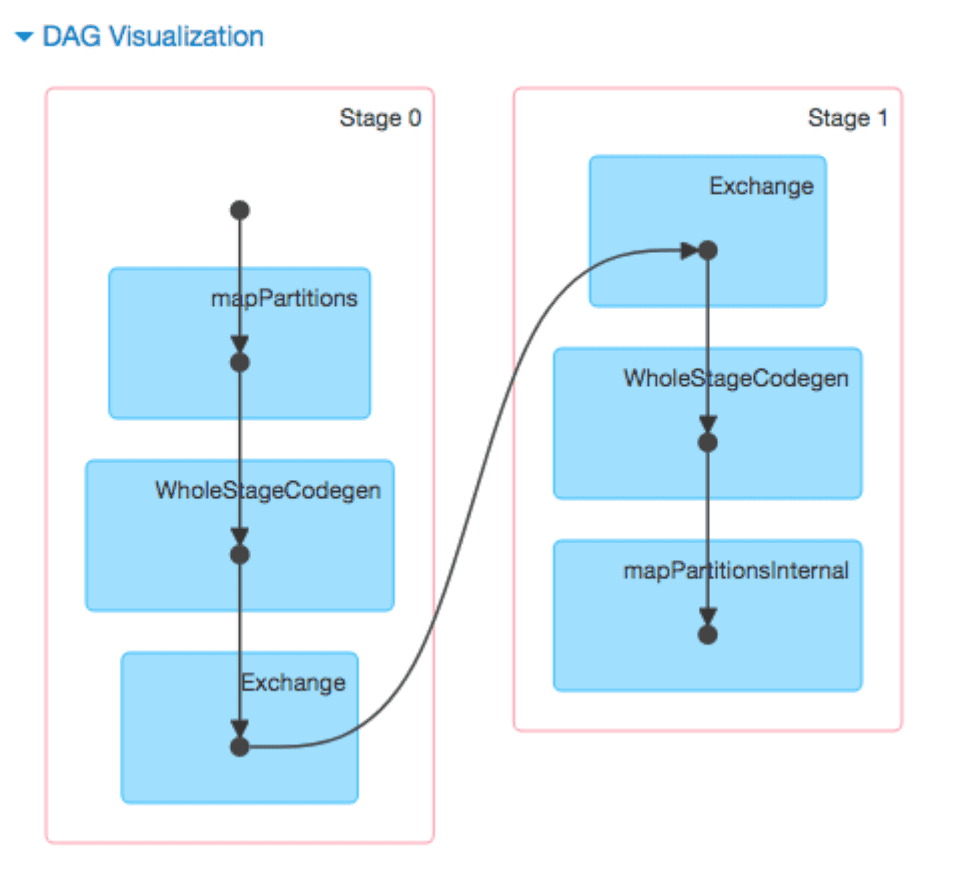

When an action triggers a job, Spark examines the dataset on which that action depends and formulates an execution plan, in the form of a Directed Acyclic Graph (DAG), for all the operators in the job. Where possible, Spark optimizes the DAG by rearranging and combining operators. For example, if a Spark job contains a map operation followed by a filter operation, and the filter does not depend on values produced by the map, the optimization can re-order the operators so that the filter first reduces the dataset before the map runs.
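The benefit of moving a filter ahead of a map can be illustrated without Spark. The following plain Python sketch is a toy model, not Spark's optimizer: it runs the same two operators in both orders and counts how often the map function is called. The row data and the function names are made up for illustration, and the reordering is valid here only because the filter reads a field that the map does not change.

```python
def run_pipeline(rows, filter_first):
    """Apply a map and a filter in the requested order.

    Returns the resulting rows and the number of map calls made.
    """
    map_calls = 0

    def add_total(row):
        nonlocal map_calls
        map_calls += 1
        return {**row, "total": row["price"] * row["qty"]}

    def is_us(row):
        # Depends only on an input field, so it can run before the map.
        return row["country"] == "US"

    if filter_first:
        result = [add_total(r) for r in rows if is_us(r)]
    else:
        result = [r for r in map(add_total, rows) if is_us(r)]
    return result, map_calls


# Made-up sample rows.
rows = [
    {"country": "US", "price": 10, "qty": 2},
    {"country": "DE", "price": 5, "qty": 1},
    {"country": "US", "price": 3, "qty": 4},
    {"country": "FR", "price": 7, "qty": 3},
]

as_written, calls_as_written = run_pipeline(rows, filter_first=False)
reordered, calls_reordered = run_pipeline(rows, filter_first=True)

print(as_written == reordered)            # same result either way
print(calls_as_written, calls_reordered)  # map work: 4 calls vs 2 calls
```

Both orders produce the same rows, but filtering first halves the number of map calls in this example.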

In the Spark Web UI, you can view the DAG Visualization for a given job.

Stage

A stage is a collection of tasks that run the same code, each on a different subset of the data. The execution plan assembles the dataset transformations into one or more stages.
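How transformations are grouped into stages can be sketched with a small toy model. The sketch below assumes, beyond what this section states, that Spark starts a new stage at each transformation that requires data to be redistributed across the cluster (a shuffle). The function split_into_stages and the example plan are hypothetical and deliberately simplified.

```python
def split_into_stages(transformations):
    """Group (name, needs_shuffle) pairs into an ordered list of stages.

    A transformation flagged as needing a shuffle opens a new stage;
    all other transformations join the current stage.
    """
    stages = [[]]
    for name, needs_shuffle in transformations:
        if needs_shuffle and stages[-1]:
            stages.append([])
        stages[-1].append(name)
    return stages


# Hypothetical plan: the flag marks operators assumed to need a shuffle.
plan = [
    ("map", False),
    ("filter", False),
    ("reduceByKey", True),
    ("map", False),
]

stages = split_into_stages(plan)
for index, stage in enumerate(stages):
    print(f"stage {index}: {stage}")
```

In this model the plan yields two stages: the first pipelines map and filter, and the second begins at reduceByKey.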

Task

A task is the fundamental unit of work in a job. Each task runs on an executor.
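The relationship between a stage, its tasks, and executor slots can be mimicked in plain Python. In the sketch below, which is a toy model and not Spark code, each task applies the same function to one subset (partition) of the data, and a thread pool stands in for the task slots. The names run_stage and sum_partition and the sample partitions are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def run_stage(task_fn, partitions, slots):
    """Run one task per partition, with at most `slots` running at once."""
    with ThreadPoolExecutor(max_workers=slots) as pool:
        # pool.map returns results in the same order as the partitions.
        return list(pool.map(task_fn, partitions))


def sum_partition(partition):
    """The code every task in this stage runs, on its own partition."""
    return sum(partition)


# Made-up data split into three partitions, so the stage has three tasks.
partitions = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]

partial_sums = run_stage(sum_partition, partitions, slots=2)
total = sum(partial_sums)

print(partial_sums)
print(total)
```

With two slots and three partitions, two tasks can run at the same time and the third starts when a slot frees up.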